
I’m sooo excited about the potential of augmented reality (AR) for sharing collections. The possibilities are endless: enhanced exhibitions, artworks and displays brought to life, history trails, directions around a building, metadata …

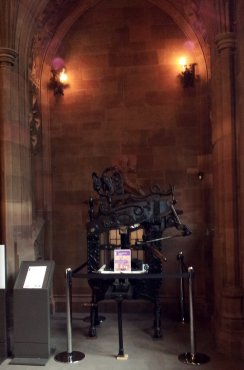

Columbia Printing Press in the John Rylands Library. The SCARLET team have experimented with using AR to explain the meaning and use of this press, e.g. the significance of the eagle on top.

The SCARLET project at the University of Manchester is particularly interesting. A JISC-funded partnership of special collections staff, academics and MIMAS tech people, it aims to create AR for special collections materials to enhance student learning.

The project’s creators had noticed something that resonates with me too: the disconnect between using special collections materials and using online information about them. As they put it, “Students must consult rare books, manuscripts and archives within the controlled conditions of library study rooms. The material is isolated from the secondary, supporting materials and the growing mass of related digital assets”.

This disconnect is often seen positively by users, who value intense immersion in primary sources and contrast it with online whizzy superficiality. But it can be negative: “an alien experience for students familiar with an information-rich, connected wireless world”, a “barrier to their use of Special Collections” – they are missing out on material that could help them.

I went along to a SCARLET workshop on the 24th to find out more. I wanted to see what ideas I could pinch for Bradford and (with my RLUK hat on) how AR can help make the case for special collections in universities. The project aligns collections with university missions in several ways: better student experience, innovative teaching, building student skills for employment, showcasing academic research to demonstrate impact for the REF … It also offers ways to innovate with existing digitised material.

So how does it work?

Using the free Junaio app, users scan a “glue object” – an element of an object in Special Collections, such as part of an illustration. They will then see whatever content has been linked to that element, e.g. a video of their lecturer talking about the object. It’s really easy and intuitive to use and not (I gather) particularly challenging to set up. Some of the students who experienced the AR were not familiar with smartphones, apps etc., but they seem to have adapted quickly (iPads were supplied by the library so that users did not have to own a device to see the AR). The pilot material, which we saw, used key editions of Dante’s Divine Comedy.

What have the SCARLET team learned from the project?

The original idea was that students would use the AR in special collections “show and tell” sessions. However, it worked better when students explored it independently after the sessions. Final year students found the content too basic but first years loved it – it helped raise their interest in the subject matter and made them keen to use more special collections.

As always, content rules! The AR must be interesting, new, and offer something unique. Just reproducing what students can already see on Blackboard is not enough. The content has to be as exciting as the tech or else the experience will be a let-down.

Of course, AR’s usefulness in higher education is not limited to special collections: examples suggested by the SCARLET team include medical/clinical work and landscape studies.

You can find out much more from the team’s blog, which includes demo objects. They will produce a toolkit later this year to help other libraries. I have so many ideas for using this at Bradford – hard to pick what to do and what might work best, so I will really appreciate the toolkit.


Very exciting project – thank you for alerting us to it, Alison.
